2023-12-14: I’ve heavily updated this to work with Astro 4.0 but for posterity I’ve saved the old version in the Internet Archive.

There’s something depressing about a blog about blogging. I never wanted this to be one of those blogs. But I’ve struggled so much with Astro’s image feature these past few weeks that I really hope it will benefit others and amount to a bit more than one over-engineered RSS feed.

First step, “fixing” lazy loading

Before we get into RSS, I want to introduce Astro’s image feature by looking at a simpler problem: lazy loading.

Astro very helpfully lets you reference images from a Markdown file using a relative path.

In other words, you can have a Markdown file `src/content/posts/blog-a-log/index.md` and write:

```markdown
Look at this picture:

![](./picture.jpg)
```

Astro will find the picture at `src/content/posts/blog-a-log/picture.jpg`, copy it to your `dist/assets` folder, generate a WebP version, then create an `<img>` tag in your generated HTML complete with `width` and `height` attributes for reducing CLS badness.

That way your images can live happily alongside their corresponding Markdown files.

Awesome.

But Astro also takes the liberty of slapping `loading="lazy"` on every single `<img>` tag.

That’s not awesome because:

- If the image is “above the fold” it will hurt your LCP score.
- It can be distracting to read blogs with `loading="lazy"` since you can see the images load as you scroll through the page.
- It’s annoying when you enter a tunnel on the train and can no longer see the images in the rest of the article.

How can you fix it? According to the Astro issue tracker, you can’t fix it from Markdown. Instead you have to make your own image service.

That sounds really hard but it turns out to be quite simple.

For example, if you’re already using the sharp image service you can wrap it like so:

```js
import sharpImageService from 'astro/assets/services/sharp';

export const nonLazySharpServiceConfig = () => ({
  entrypoint: 'src/utils/non-lazy-sharp-service',
  config: {},
});

/**
 * A fork of the sharp image service that drops the loading="lazy"
 * attribute from outputted image tags since it can hurt LCP scores (and is
 * generally annoying when scrolling the page).
 */
const nonLazySharpService = {
  validateOptions: sharpImageService.validateOptions,
  getURL: sharpImageService.getURL,
  parseURL: sharpImageService.parseURL,
  getHTMLAttributes: (options, serviceOptions) => {
    const result = sharpImageService.getHTMLAttributes(options, serviceOptions);
    delete result.loading;
    return result;
  },
  transform: sharpImageService.transform,
};

export default nonLazySharpService;
```

Drop that in, e.g. `src/utils/non-lazy-sharp-service.js`, and include it in `astro.config.js` in place of `sharpImageService()` and you’re done.

Eager loading all around.
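If you’d rather not list every method by hand, the same idea can be written as a small wrapper that copies the whole service and only overrides `getHTMLAttributes`, so any method you didn’t list explicitly is forwarded too. This is a sketch, not a drop-in: `withoutLazyLoading` is a name I’ve made up, and the stub service below stands in for the real sharp service.

```javascript
/**
 * Hypothetical helper: returns a copy of an image service whose
 * getHTMLAttributes() omits the `loading` attribute. Every other method is
 * forwarded unchanged via the object spread.
 */
function withoutLazyLoading(service) {
  // Build a new attributes object without `loading` instead of mutating the
  // one the wrapped service returned.
  const dropLoading = ({ loading: _loading, ...rest }) => rest;

  return {
    ...service,
    getHTMLAttributes(options, serviceOptions) {
      const result = service.getHTMLAttributes(options, serviceOptions);
      // Cope with services that return a promise as well as a plain object.
      return result instanceof Promise
        ? result.then(dropLoading)
        : dropLoading(result);
    },
  };
}

// Usage with a stand-in service (the real one would be the sharp service).
const stubService = {
  getURL: (options) => `/_image?href=${options.src}`,
  getHTMLAttributes: () => ({ loading: 'lazy', decoding: 'async', width: 800 }),
};
const eagerService = withoutLazyLoading(stubService);
console.log(eagerService.getHTMLAttributes({ src: 'picture.jpg' }, {}));
// → { decoding: 'async', width: 800 }
```

In the service file that would presumably become `export default withoutLazyLoading(sharpImageService);` in place of the hand-written object.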

If you get errors like the following:

```
No loader is configured for ".node" files: node_modules/sharp/build/Release/sharp-linux-x64.node
```

you’ll also need to exclude the sharp binary from the bundle by adding something like the following to your vite config:

```js
optimizeDeps: { exclude: ['sharp'] },
```
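Putting both pieces together, `astro.config.js` might end up looking something like the sketch below. It assumes the service file lives at `src/utils/non-lazy-sharp-service.js` as suggested above and that the rest of your config stays as it is; `image.service` is where Astro takes the `{ entrypoint, config }` object that `nonLazySharpServiceConfig()` returns.

```javascript
import { defineConfig } from 'astro/config';
// Assumed location of the service file described above.
import { nonLazySharpServiceConfig } from './src/utils/non-lazy-sharp-service.js';

export default defineConfig({
  image: {
    // Used in place of sharpImageService() from 'astro/config'.
    service: nonLazySharpServiceConfig(),
  },
  vite: {
    // Keep the native sharp binary out of vite's dependency pre-bundling.
    optimizeDeps: { exclude: ['sharp'] },
  },
});
```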

The hard bit: RSS

Now onto the hard problem. I had set up a pretty solid RSS feed using Astro’s RSS feature because, if you’re setting up a blog, apparently you should have an RSS feed.

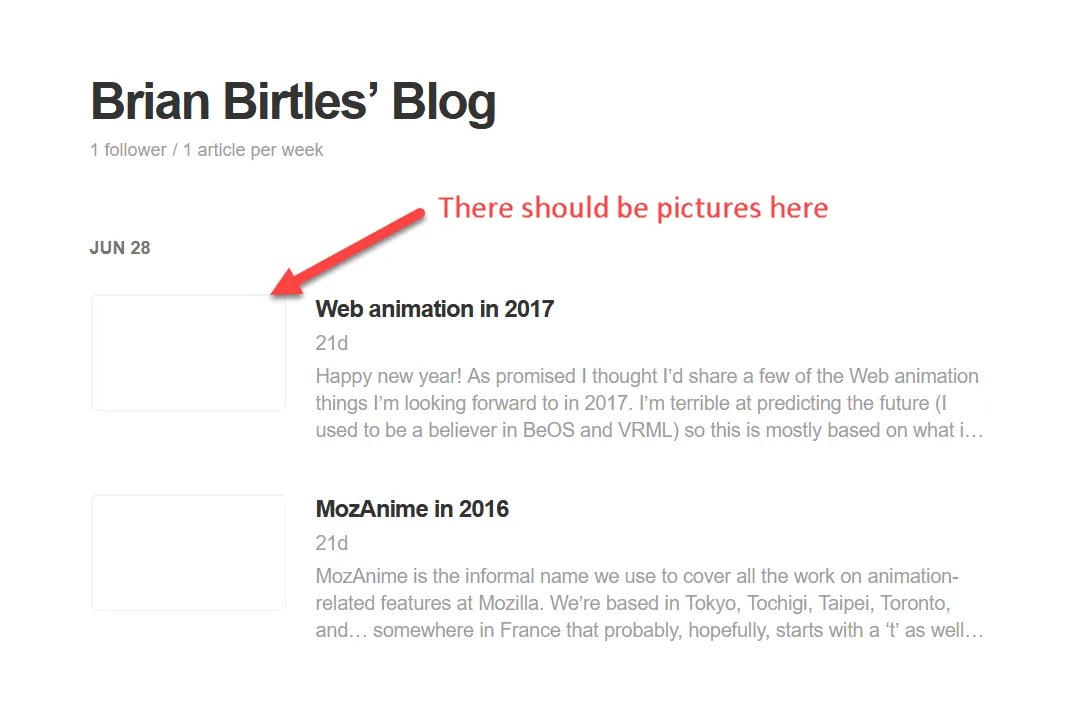

I’ve never used an RSS feed reader but I like the idea. I decided to double-check my awesome RSS feed with Feedly only to be devastated by the lack of pretty pictures.

Check out that follower count too.

Inspecting the source of the RSS feed itself, it turns out all the images were still using the relative path from the source file, i.e. `./picture.jpg` from above.

So “all we need to do” is adjust those paths to the final resolved paths.

How hard could it possibly be?

(Spoiler: very very hard)

It turns out images in Astro go on quite the journey from being announced in Markdown to finally appearing in HTML ready for showtime.

From Markdown to optimized HTML

Astro is built on vite. Before encountering this problem I’d been trying my hardest to not know what vite is so, if you’re like me, all you really need to know is vite is like webpack but built on rollup, which is also like webpack.

That last point is important because as you’re cruising the Astro source code you’ll see things like `this.resolve()` and will surely wonder where on earth they’re defined.

It turns out `this.resolve()` and a whole lot of other functions attached to `this` are part of the Rollup plugin API.

With that background out of the way, the journey from Markdown image to optimized HTML image goes something like this:

- Astro registers a vite plugin called `vite-plugin-markdown` which handles any Markdown files it encounters.
- `vite-plugin-markdown` parses the Markdown using `@astrojs/markdown-remark`, which basically wraps up the `remark` library and configures a bunch of plugins.
- The `remarkCollectImages` plugin extracts all the relative image paths that should be optimized and stores them in the Markdown virtual file metadata.
- After converting the Markdown to HTML, another plugin, `rehypeImages`, looks for any `<img>` elements whose `src` matches one of the paths we found in the previous step. For any matching `<img>`s it drops the `src` attribute and adds an `__ASTRO_IMAGE__` one in its place with the same value.
- Back in `vite-plugin-markdown`, we read back all the image paths from the third step and resolve them using Rollup’s `resolve` API, turning `./picture.jpg` into `/home/me/blog/src/content/posts/blog-a-log/picture.jpg`.
- Finally, we export a big template string of TypeScript code in place of the Markdown file that:
  - Includes a map of image paths from the relative image paths to their fully resolved paths.
  - Takes the HTML we generated previously and runs a regex on it to replace all those `__ASTRO_IMAGE__` attributes with the corresponding resolved path from the map of image paths.
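That last regex step is easier to picture with a toy version. The sketch below is not Astro’s code: `replaceImageAttributes` is a hypothetical helper, and the exact `__ASTRO_IMAGE__="…"` attribute format it matches is an assumption. It swaps each such attribute for a `src` taken from the map of image paths and leaves anything it can’t find alone.

```javascript
/**
 * Hypothetical stand-in for the regex pass: replace every
 * __ASTRO_IMAGE__="<relative path>" attribute with src="<resolved path>".
 */
function replaceImageAttributes(html, imageMap) {
  return html.replace(/__ASTRO_IMAGE__="([^"]+)"/g, (match, relativePath) => {
    const resolved = imageMap.get(relativePath);
    // Leave unknown paths untouched rather than producing a broken src.
    return resolved === undefined ? match : `src="${resolved}"`;
  });
}

const imageMap = new Map([
  ['./picture.jpg', '/home/me/blog/src/content/posts/blog-a-log/picture.jpg'],
]);
console.log(
  replaceImageAttributes('<img __ASTRO_IMAGE__="./picture.jpg" alt="A picture">', imageMap)
);
// → <img src="/home/me/blog/src/content/posts/blog-a-log/picture.jpg" alt="A picture">
```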

That’s the overview, but we glossed over a lot of details with regard to the image path map in the second last bullet point.

The template string to generate the map looks like this:

```js
return `
import { getImage } from "astro:assets";
${imagePaths
  .map(
    (entry) =>
      `import Astro__${entry.safeName} from ${JSON.stringify(entry.raw)};`
  )
  .join('\n')}

const images = async function() {
  return {
    ${imagePaths
      .map(
        (entry) =>
          `"${entry.raw}": await getImage({src: Astro__${entry.safeName}})`
      )
      .join(',\n')}
  }
}

//...
`;
```

The mix of executed code and generated code here is a bit of a mind-bender but there are two important points.

Firstly, the `import Astro__${entry.safeName} from ${JSON.stringify(entry.raw)};` part hides the fact that this is triggering yet another vite plugin. This time it’s the `vite-plugin-assets` plugin.

By importing an image using ESM we arrive at the `astro:assets:esm` section of that plugin, which calls `emitESMImage`.

`emitESMImage` emits the file using Rollup’s `emitFile` API and returns the image metadata, like the width and height, as well as a handle to the image in the form `__ASTRO_ASSET_IMAGE__${handle}__`.

In effect, this takes something like `/home/me/blog/src/content/posts/blog-a-log/picture.jpg` and returns a handle like `__ASTRO_ASSET_IMAGE__6cf702f7__`, where `6cf702f7` is the `referenceId` returned by `emitFile`.

Then, in the `renderChunk` hook of the same plugin, it finds the `__ASTRO_ASSET_IMAGE__6cf702f7__` handle, extracts the `referenceId` `6cf702f7`, looks it up using Rollup’s `this.getFileName` and gets back `assets/picture.b5a66e4a.jpg`.
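Here’s a rough model of that `renderChunk` step. `resolveAssetHandles` is hypothetical, the `getFileName` argument stands in for Rollup’s `this.getFileName`, and the regex is based only on the handle format shown above, so treat it as an assumption about Astro’s exact format.

```javascript
/**
 * Hypothetical model of the renderChunk step: find every
 * __ASTRO_ASSET_IMAGE__<referenceId>__ handle in a chunk of code and replace
 * it with the emitted file name returned by getFileName.
 */
function resolveAssetHandles(code, getFileName) {
  return code.replace(
    /__ASTRO_ASSET_IMAGE__([\w$-]+?)__/g,
    // Fall back to the original handle if the reference is unknown.
    (match, referenceId) => getFileName(referenceId) ?? match
  );
}

// A stand-in for Rollup's record of emitted files.
const emittedFiles = new Map([['6cf702f7', 'assets/picture.b5a66e4a.jpg']]);
console.log(
  resolveAssetHandles(
    'export default { src: "__ASTRO_ASSET_IMAGE__6cf702f7__" };',
    (id) => emittedFiles.get(id)
  )
);
// → export default { src: "assets/picture.b5a66e4a.jpg" };
```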

Secondly, we pass the metadata returned from `emitESMImage`, including the now-resolved `src` (i.e. `assets/picture.b5a66e4a.jpg`), along to `getImage`.

`getImage` calls our image service, which calls `addStaticImage` from `vite-plugin-assets` to ensure that our WebP image gets generated.

`addStaticImage` adds its own hash to the resulting file, producing something like `/assets/picture.b5a66e4a_13mnAp.webp`, which `getImage` forwards on.

Getting the resolved path in our RSS feed

So, how do we get from `./picture.jpg` to `/assets/picture.b5a66e4a_13mnAp.webp` in our RSS feed?

Summarising the above, there are roughly four steps:

- Get all the suitable relative images from the Markdown file (e.g. `./picture.jpg`).
- Resolve the absolute path on disk for each (e.g. `/home/me/blog/src/content/posts/blog-a-log/picture.jpg`).
- Emit the file as part of our Rollup build, producing, e.g., `assets/picture.b5a66e4a.jpg`.
- Get the path of the optimized version of the file (e.g. `assets/picture.b5a66e4a_13mnAp.webp`).

Pretty straightforward except step 3. Step 3 is trouble.

Step 1: Collecting the relative images

In order to include an HTML version of our posts in the RSS feed we need to do our own Markdown processing.

The Astro docs suggest using `markdown-it` or similar for this.

I decided to use `remark` so that I can re-use plugins between parsing Markdown for regular display and for generating HTML previews.

Doing that means we can re-use Astro’s remark plugin for extracting relative images like so:

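```js
import { unified } from 'unified';
import remarkParse from 'remark-parse';
import remarkRehype from 'remark-rehype';
import rehypeSanitize from 'rehype-sanitize';
import rehypeStringify from 'rehype-stringify';
import { remarkCollectImages } from '@astrojs/markdown-remark';

// ...

// Using a singleton parser improves performance _dramatically_
const postParser = unified()
  .use(remarkParse)
  .use(remarkCollectImages)
  .use(remarkRehype)
  .use(rehypeSanitize)
  .use(rehypeStringify);

// When generating each RSSItem we do:
const content = await postParser.process({ path, value: post.body });
```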

Note that although remark will accept the Markdown content (`post.body` above) by itself, we also need to pass the path of the Markdown file too, or else `remarkCollectImages` will skip it.
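To make it clearer what `remarkCollectImages` leaves behind for the later steps, here’s a simplified stand-in. It is not Astro’s implementation: `collectRelativeImages` is a hypothetical name, the tree is a hand-written mdast-style object rather than real remark output, and “relative” is approximated as starting with `./` or `../`. What matters is the shape of the result, a `Set` on `file.data.imagePaths`, which is what the rehype plugin below checks for.

```javascript
/**
 * Simplified, hypothetical stand-in for remarkCollectImages: walk an
 * mdast-style tree and record relative image URLs on file.data.imagePaths.
 */
function collectRelativeImages(tree, file) {
  // Without a path there is nothing to resolve relative URLs against.
  if (!file.path) return;

  const imagePaths = new Set();
  const walk = (node) => {
    if (node.type === 'image' && /^\.{1,2}\//.test(node.url)) {
      imagePaths.add(node.url);
    }
    for (const child of node.children ?? []) walk(child);
  };
  walk(tree);

  file.data.imagePaths = imagePaths;
}

// A hand-written tree with one relative image and one remote image.
const tree = {
  type: 'root',
  children: [
    {
      type: 'paragraph',
      children: [
        { type: 'text', value: 'Look at this picture: ' },
        { type: 'image', url: './picture.jpg', alt: '' },
        { type: 'image', url: 'https://example.com/remote.png', alt: '' },
      ],
    },
  ],
};

const withPath = { path: 'src/content/posts/blog-a-log/index.md', data: {} };
collectRelativeImages(tree, withPath);
console.log([...withPath.data.imagePaths]); // → [ './picture.jpg' ]

const withoutPath = { data: {} };
collectRelativeImages(tree, withoutPath);
console.log(withoutPath.data.imagePaths); // → undefined
```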

Step 2: Resolving the absolute path on disk

Astro resolves relative paths with the following code:

```js
(await this.resolve(imagePath, id))?.id ??
  path.join(path.dirname(id), imagePath);
```
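Outside Rollup, that line amounts to “ask the resolver first, otherwise join the image path onto the Markdown file’s directory”. In the sketch below `resolveImagePath` is hypothetical and the optional `resolve` callback stands in for `this.resolve`; it’s only there to show how `?.id ??` falls back when the resolver returns `null`.

```javascript
import * as path from 'node:path';

/**
 * Hypothetical model of the resolution above: prefer whatever the resolver
 * returns, otherwise fall back to a plain path join relative to the file.
 */
async function resolveImagePath(imagePath, id, resolve = async () => null) {
  return (
    (await resolve(imagePath, id))?.id ??
    path.join(path.dirname(id), imagePath)
  );
}

const markdownId = '/home/me/blog/src/content/posts/blog-a-log/index.md';

// No resolver result, so the fallback join is used.
resolveImagePath('./picture.jpg', markdownId).then((resolved) => {
  console.log(resolved); // → /home/me/blog/src/content/posts/blog-a-log/picture.jpg
});
```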

The first part uses Rollup’s `this.resolve`, which ends up running any plugins registered for the `resolveId` hook.

In Astro’s case, that includes the `configAliasVitePlugin` plugin, which picks up your `tsconfig.json` and applies any aliases from the `paths` section, but fortunately Astro 3 dropped the alias it added there so we can ignore that plugin.

Instead, we can just add another plugin that operates on the HTML—a rehype plugin—that picks up the extracted image references and locates them on disk like so:

```js
import * as fs from 'node:fs';
import * as path from 'node:path';
import { visit } from 'unist-util-visit';

export function rehypeAstroImages(options) {
  return async function (tree, file) {
    if (
      !file.path ||
      !(file.data.imagePaths instanceof Set) ||
      !file.data.imagePaths?.size
    ) {
      return;
    }

    const imageNodes = [];
    const imagesToResolve = new Map();

    visit(tree, (node) => {
      if (
        node.type !== 'element' ||
        node.tagName !== 'img' ||
        typeof node.properties?.src !== 'string' ||
        !node.properties?.src ||
        !file.data.imagePaths.has(node.properties.src)
      ) {
        return;
      }

      if (imagesToResolve.has(node.properties.src)) {
        imageNodes.push(node);
        return;
      }

      const absolutePath = path.resolve(
        path.dirname(file.path),
        node.properties.src
      );
      if (!fs.existsSync(absolutePath)) {
        return;
      }

      imageNodes.push(node);
      imagesToResolve.set(node.properties.src, absolutePath);
    });

    // ... to be continued
  };
}
```

Step 3: Getting the path to the asset produced by Rollup

This is where it gets hard.

It seems like we could just call `this.emitFile`, grab the `referenceId`, then look it up with `this.getFileName`.

But that requires having a Rollup plugin context to work with.
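For comparison, this is roughly what having a plugin context buys you. The sketch is a bare Rollup plugin with a made-up name and made-up options (`name`, `source`, `onResolved`) that emits an asset when the build starts and looks up its final file name from `generateBundle`, by which point the output file names have been decided. It illustrates the two APIs; I haven’t wired anything like it into Astro.

```javascript
/**
 * Hypothetical Rollup plugin: emit one asset, then report its final name.
 * In practice `source` would come from reading the image off disk.
 */
function emitAssetSketch({ name, source, onResolved }) {
  let referenceId;
  return {
    name: 'emit-asset-sketch',
    buildStart() {
      // emitFile hands back a referenceId straight away...
      referenceId = this.emitFile({ type: 'asset', name, source });
    },
    generateBundle() {
      // ...and the final (hashed) file name is looked up during output.
      onResolved(this.getFileName(referenceId));
    },
  };
}
```

Outside of Rollup, the hooks can be exercised with a stub `this` that fakes `emitFile` and `getFileName`.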

Many parts of the Astro codebase do this sort of thing.

emitESMImage itself takes this.emitFile as an argument.

Many other places take a plugin context as an argument.

But where do we get a plugin context from in the middle of generating our RSS?

This is where I get stuck and I hope someone reading this blog can tell me an easy way to do this. I fear the right way might involve creating yet another vite plugin and exporting TypeScript template strings and other messy business so for now I’ve implemented the hacky way: shadow what Rollup does very poorly and hope it’s enough.

Specifically, we want to shadow how Rollup goes from <absolute path>/picture.jpg to assets/picture.<hash>.jpg.

First the hash. Rollup has a function `getXxhash` which calls into `xxhashBase64Url`, which boils down to the following Rust code:

```rust
use base64::{engine::general_purpose, Engine as _};
use xxhash_rust::xxh3::xxh3_128;

pub fn xxhash_base64_url(input: &[u8]) -> String {
    let hash = xxh3_128(input).to_le_bytes();
    general_purpose::URL_SAFE_NO_PAD.encode(hash)
}
```

It’s using the XXH3_128 variant of the xxHash algorithm and encoding the little-endian bytes of the result as URL-safe base64 without padding.
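The two details worth copying from that Rust snippet are the byte order (`to_le_bytes`) and the unpadded URL-safe alphabet. Node can handle the encoding half itself via its `base64url` encoding; the sketch below takes a 128-bit hash as a `BigInt` (computing XXH3 is the part Node can’t do, so the inputs here are placeholder numbers) and encodes it the same way. `encodeHash128` is a hypothetical name.

```javascript
/**
 * Encode a 128-bit hash like the Rust snippet: little-endian bytes, then
 * URL-safe base64 without padding. The hashing itself is out of scope.
 */
function encodeHash128(hash) {
  const bytes = Buffer.alloc(16);
  // Low 64 bits first, then the high 64 bits, matching to_le_bytes().
  bytes.writeBigUInt64LE(hash & 0xffffffffffffffffn, 0);
  bytes.writeBigUInt64LE(hash >> 64n, 8);
  // Node's 'base64url' encoding uses '-' and '_' and omits '=' padding.
  return bytes.toString('base64url');
}

console.log(encodeHash128(1n).length); // → 22
```

Sixteen bytes always come out as 22 characters, and Rollup then only uses a prefix of that string in file names.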

Unfortunately none of the Node implementations of xxHash I could find support the 128-bit variant, but fortunately Rollup publishes a WASM version of its binaries under `@rollup/wasm-node`.

As a result we can install `@rollup/wasm-node` and import the hashing function as follows:

```js
import { xxhashBase64Url } from '@rollup/wasm-node/dist/wasm-node/bindings_wasm.js';
```

That will generate a rather long hash but Rollup only uses a part of it in the filename. In fact, the filename generation is quite involved…

```ts
function generateAssetFileName(
  name: string | undefined,
  source: string | Uint8Array,
  sourceHash: string,
  outputOptions: NormalizedOutputOptions,
  bundle: OutputBundleWithPlaceholders
): string {
  const emittedName = outputOptions.sanitizeFileName(name || 'asset');
  return makeUnique(
    renderNamePattern(
      typeof outputOptions.assetFileNames === 'function'
        ? outputOptions.assetFileNames({ name, source, type: 'asset' })
        : outputOptions.assetFileNames,
      'output.assetFileNames',
      {
        ext: () => extname(emittedName).slice(1),
        extname: () => extname(emittedName),
        hash: (size) =>
          sourceHash.slice(0, Math.max(0, size || defaultHashSize)),
        name: () =>
          emittedName.slice(
            0,
            Math.max(0, emittedName.length - extname(emittedName).length)
          ),
      }
    ),
    bundle
  );
}
```

Looking at the important bits here:

- `sanitizeFileName` is only dealing with drive letters and things, so hopefully we can ignore that 👍
- `makeUnique` ensures the filename doesn’t clash, but since we’re including a bit of hash in the filename hopefully we’re ok 🤞
- `renderNamePattern` is problematic. It implements the asset format defined by `output.assetFileNames`, which we’re likely to want to override 😬

In fact, my Astro config already does:

```js
export default defineConfig({
  // ...
  vite: {
    build: {
      rollupOptions: {
        output: {
          assetFileNames: (assetInfo) => {
            if (assetInfo.name === 'style.css') {
              return 'css/styles.[hash][extname]';
            }
            return 'assets/[name].[hash][extname]';
          },
        },
      },
    },
  },
});
```
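To see what `renderNamePattern` does with that callback, here’s a small sketch that expands the callback’s output using the same placeholder regex as the `renderNamePattern` fork later in this post. `expandPattern` is a hypothetical name, only the `[name]`, `[extname]` and `[hash]`/`[hash:N]` placeholders are handled, and the hash is invented.

```javascript
import * as path from 'node:path';

// The callback from the config above.
const assetFileNames = (assetInfo) => {
  if (assetInfo.name === 'style.css') {
    return 'css/styles.[hash][extname]';
  }
  return 'assets/[name].[hash][extname]';
};

// Minimal placeholder expander (hypothetical, for illustration only).
function expandPattern(pattern, fileName, sourceHash) {
  const ext = path.extname(fileName);
  const replacements = {
    name: () => path.basename(fileName, ext),
    extname: () => ext,
    // Default to 8 characters, like Rollup's defaultHashSize.
    hash: (size) => sourceHash.slice(0, size || 8),
  };
  return pattern.replace(/\[(\w+)(:\d+)?]/g, (_match, type, size) =>
    replacements[type](size && Number.parseInt(size.slice(1)))
  );
}

const fakeHash = 'b5a66e4aQ1x9Zk3p'; // invented
console.log(expandPattern(assetFileNames({ name: 'picture.jpg' }), 'picture.jpg', fakeHash));
// → assets/picture.b5a66e4a.jpg
console.log(expandPattern(assetFileNames({ name: 'style.css' }), 'style.css', fakeHash));
// → css/styles.b5a66e4a.css
```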

Now, unfortunately, as far as I can tell Astro doesn’t provide any easy way to access configuration values and, as someone else discovered, simply importing `astro.config.js` no longer works either.

You can access the config using an integration but if you try loading the same integration when generating RSS you’ll be disappointed to learn that it’s executing in a different context so you can’t share the config you gleaned from the integration API.

At this point, the simplest thing is just to move the vite config to a separate file, `vite.config.js`, so it can be imported separately from both `astro.config.js` and our rehype plugin.

With our `output.assetFileNames` option finally available, we can proceed to fork Rollup’s `generateAssetFileName` into just the parts we need.

We don’t need most of the validation code since presumably that’s going to be run when we generate the actual asset.

Putting it all together we have something like this:

```js
import * as fs from 'node:fs';
import * as path from 'node:path';
import { xxhashBase64Url } from '@rollup/wasm-node/dist/wasm-node/bindings_wasm.js';

/**
 * @param {string} absolutePath
 * @param {Buffer} data
 * @param {string=} assetsDir
 * @param {ViteConfig=} viteConfig
 */
function getImageAssetFileName(absolutePath, data, assetsDir, viteConfig) {
  const source = new Uint8Array(data);
  const sourceHash = getImageHash(source);

  if (Array.isArray(viteConfig?.build?.rollupOptions?.output)) {
    throw new Error("We don't know how to handle multiple output options 😬");
  }

  // Defaults to _astro
  //
  // https://docs.astro.build/en/reference/configuration-reference/#buildassets
  assetsDir = assetsDir || '_astro';

  // Defaults to `${settings.config.build.assets}/[name].[hash][extname]`
  //
  // https://github.com/withastro/astro/blob/astro%404.0.3/packages/astro/src/core/build/static-build.ts#L208C22-L208C78
  const assetFileNames =
    viteConfig?.build?.rollupOptions?.output?.assetFileNames ||
    `${assetsDir}/[name].[hash][extname]`;

  return generateAssetFileName(
    path.basename(absolutePath),
    source,
    sourceHash,
    assetFileNames
  );
}

function getImageHash(imageSource) {
  // Keep the full hash, as Rollup does; the [hash] placeholder below
  // truncates it (to 8 characters unless a size like [hash:12] is given).
  return xxhashBase64Url(imageSource);
}

function generateAssetFileName(name, source, sourceHash, assetFileNames) {
  const defaultHashSize = 8;
  return renderNamePattern(
    typeof assetFileNames === 'function'
      ? assetFileNames({ name, source, type: 'asset' })
      : assetFileNames,
    {
      ext: () => path.extname(name).slice(1),
      extname: () => path.extname(name),
      hash: (size) => sourceHash.slice(0, Math.max(0, size || defaultHashSize)),
      name: () =>
        name.slice(0, Math.max(0, name.length - path.extname(name).length)),
    }
  );
}

function renderNamePattern(pattern, replacements) {
  return pattern.replace(/\[(\w+)(:\d+)?]/g, (_match, type, size) =>
    replacements[type](size && Number.parseInt(size.slice(1)))
  );
}
```

Step 4: Getting the path to the optimized asset

Now that we have the asset name that Rollup is (hopefully) going to produce, getting the path to the optimized asset is easy since Astro helpfully provides the `getImage` function for this.

Continuing our rehype plugin, we can resolve the references as follows:

```js
import * as url from 'node:url';
import { getImage } from 'astro:assets';
import { imageMetadata } from 'astro/assets/utils';

// ...

    const imagePromises = [];
    for (const [relativePath, absolutePath] of imagesToResolve.entries()) {
      imagePromises.push(
        fs.promises
          .readFile(absolutePath)
          // Return imageMetadata's promise directly (instead of wrapping it in
          // `new Promise`) so that a rejection propagates to allSettled below
          // rather than leaving this promise pending forever.
          .then((buffer) => imageMetadata(buffer))
          .then((meta) => {
            if (!meta) {
              throw new Error(`Failed to get metadata for image ${relativePath}`);
            }

            const fileUrl = url.pathToFileURL(absolutePath);
            fileUrl.searchParams.append('origWidth', meta.width.toString());
            fileUrl.searchParams.append('origHeight', meta.height.toString());
            fileUrl.searchParams.append('origFormat', meta.format);

            const assetPath =
              '/@fs' +
              absolutize(url.fileURLToPath(fileUrl) + fileUrl.search).replace(
                /\\/g,
                '/'
              );

            return getImage({ src: { ...meta, src: assetPath } });
          })
          .then((image) => [
            relativePath,
            { src: image.src, attributes: image.attributes },
          ])
      );
    }

    // Process the results
    const resolvedImages = new Map();
    for (const result of await Promise.allSettled(imagePromises)) {
      if (result.status === 'fulfilled') {
        resolvedImages.set(...result.value);
      } else {
        console.warn('Failed to resolve image', result.reason);
      }
    }

    for (const node of imageNodes) {
      const imageDetails = resolvedImages.get(node.properties.src);
      if (imageDetails) {
        const { src: resolvedSrc, attributes } = imageDetails;
        if (options.rootUrl) {
          node.properties.src = new URL(resolvedSrc, options.rootUrl).toString();
        } else {
          node.properties.src = absolutize(resolvedSrc);
        }
        node.properties.width = attributes.width;
        node.properties.height = attributes.height;
      }
    }

function absolutize(filePath) {
  return !filePath.startsWith('/') ? `/${filePath}` : filePath;
}
```
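The least obvious part of that code is the `/@fs` path handed to `getImage`: the original file’s absolute path with its dimensions and format tacked on as query parameters. Pulled out on its own (`toFsAssetPath` is my name for it, and the metadata values are made up), the construction looks like this:

```javascript
import * as url from 'node:url';

/**
 * Hypothetical helper mirroring the assetPath construction above: a /@fs
 * path for the source file carrying its original width, height and format.
 */
function toFsAssetPath(absolutePath, meta) {
  const fileUrl = url.pathToFileURL(absolutePath);
  fileUrl.searchParams.append('origWidth', meta.width.toString());
  fileUrl.searchParams.append('origHeight', meta.height.toString());
  fileUrl.searchParams.append('origFormat', meta.format);

  // fileURLToPath ignores the query string, so append it again, make sure
  // the path starts with a slash, then normalise Windows separators.
  const withQuery = url.fileURLToPath(fileUrl) + fileUrl.search;
  const absolute = withQuery.startsWith('/') ? withQuery : `/${withQuery}`;
  return '/@fs' + absolute.replace(/\\/g, '/');
}

console.log(
  toFsAssetPath('/home/me/blog/src/content/posts/blog-a-log/picture.jpg', {
    width: 800,
    height: 600,
    format: 'jpg',
  })
);
// On POSIX:
// → /@fs/home/me/blog/src/content/posts/blog-a-log/picture.jpg?origWidth=800&origHeight=600&origFormat=jpg
```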

Epilogue: Ship it!

We did it! Having finally tamed Astro Assets I thought it was time I unleashed my abomination on the world as a brand new drop-in package. I spent half a day setting up the repo and npm package “just right”, popped it into my blog and fired up a build only to get…

```
Error [ERR_UNSUPPORTED_ESM_URL_SCHEME]: Only URLs with a scheme
in: file and data are supported by the default ESM loader.
Received protocol 'astro:'
```

It turns out all these fancy vite plugins and virtual modules in Astro aren’t applied to code under `node_modules`?

I tried to brute force my way past the first error but next it was `virtual:image-service` that was not reachable.

I tried to persuade Rollup to do its thing for my module too but with no luck.

By this point I have to conclude that all this code is just not the Astro way to do things. The correct approach probably involves a bundle of vite plugins writing TypeScript template string incantations but I’ve spent way too much time on this problem by now so in lieu of an npm package I have a gist.

I look forward to someone reviewing my code and telling me all the places I did it wrong!

Unfortunately, it looks like Feedly only ever fetches a post once so it doesn’t matter that I fixed my old posts—Feedly users will see the original broken version for eternity 😅

I’m glad to see I’m not the only one struggling with this. I took a different path to you (import aliases added another layer of bullshit to my journey), ended up with about twice as much code and it still only works locally, not when I try to deploy through Netlify or Vercel. I wonder what the ‘Astro way’ would be for this? I appreciate the write-up anyway.